Mastering the Evolution of Your AI Assistant in Splunk: The Ultimate Guide from Beginner to LLM Pro

The Transition of AI from Hype to Reality

The buzz surrounding artificial intelligence is undeniable. In recent months, that fascination has turned into concrete results, particularly through emerging applications in the B2B landscape, where early experiments and pilot programs are now revealing significant business advantages.

This blog post walks you through integrating Splunk data with Large Language Models (LLMs) so users can interact with that data in plain language. It draws on the experience of Zeppelin, a prominent player in the global sales and service of construction machinery that is also active in power systems, equipment rental, and plant engineering.

A Quick Heads-Up – BEFORE YOU BEGIN

Once you finish reading this blog, you might be eager to kick off your own project. But wait! Before jumping in, it’s vital to define a project scope that covers the following aspects:

- The objectives of your AI project

- The business benefits it will deliver to your organization

- The data it requires

- The expected data flow

Taking this initial step lays the foundation for validating your initiative, setting realistic expectations, and addressing data privacy and security concerns. Your answers will also determine which architectural components you can use.

To go deeper, consider exploring the AI security reference architectures from Cisco Robust Intelligence, which include secure design patterns and practices for chatbots, Retrieval-Augmented Generation (RAG) applications, and AI agents.

The deployment options vary widely. For example, you can connect directly to a cloud-hosted LLM, or route requests through an intermediary that adds security features, such as the monitoring provided by Cisco Robust Intelligence. Alternatively, you could run a pre-trained model in an isolated on-premises environment, or deploy a custom LLM in a container connected to the Splunk App for Data Science and Deep Learning (DSDL).
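These deployment options differ mainly in where the model runs and what sits between it and Splunk. One way to keep that choice open is to hide the model behind a small interface. The Python sketch below is purely illustrative: `LLMBackend`, `EchoBackend`, and `GuardedBackend` are hypothetical names, the backend is a stub rather than a real model endpoint, and the policy check is a placeholder that does not reproduce any Cisco Robust Intelligence API.

```python
from dataclasses import dataclass, field
from typing import Callable, Protocol


class LLMBackend(Protocol):
    """Anything that turns a prompt into a completion: cloud API, on-prem model, DSDL container."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoBackend:
    """Hypothetical stand-in for a real model endpoint; it just echoes the prompt."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] reply to: {prompt}"


@dataclass
class GuardedBackend:
    """Hypothetical intermediary that logs and screens prompts before forwarding them."""

    inner: LLMBackend
    is_allowed: Callable[[str], bool]  # placeholder policy, not a vendor API
    audit_log: list[str] = field(default_factory=list)

    def complete(self, prompt: str) -> str:
        self.audit_log.append(prompt)  # keep every request for later monitoring
        if not self.is_allowed(prompt):
            raise PermissionError("prompt rejected by policy")
        return self.inner.complete(prompt)


# Switching deployment options only changes how the backend is constructed.
direct: LLMBackend = EchoBackend("cloud")
guarded: LLMBackend = GuardedBackend(EchoBackend("cloud"), lambda p: "password" not in p.lower())
```

An on-premises model or a DSDL-hosted container would simply be another class with a `complete` method, so the rest of the assistant does not need to change.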

Crafting the Business Case

Before we explore the architectural details you can adapt for your own project, let’s look at a successful use case developed by Florian Zug at Zeppelin. The objective was to create an AI assistant that helps employees find pricing information for used machinery. This requires continuously retrieving listings from various global platforms that sell second-hand equipment. The assistant, built on Splunk, should deliver the necessary information while requiring minimal Splunk knowledge from its users. Traditional Splunk visualizations, whether dashboards or interfaces built with the Splunk React UI, restrict users to the predefined queries they were designed around; an AI assistant removes that limitation. For example, a user could ask: “What has been the price trend for the Caterpillar MH3022 in Germany over the last six months?”
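In this architecture the LLM writes the SPL itself, but it helps to know what a good query for the example question looks like, for instance as a few-shot example in the prompt or as a regression test. The sketch below assumes a hypothetical index `used_machinery` with fields `manufacturer`, `model`, `country`, and `price`; the actual index and field names used at Zeppelin are not described in this post.

```python
def quote_spl(value: str) -> str:
    """Wrap a value in double quotes, escaping backslashes and quotes for SPL."""
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def price_trend_spl(manufacturer: str, model: str, country: str, months: int) -> str:
    """Build an SPL query for the monthly average price of one model in one country.

    The index and field names are hypothetical placeholders.
    """
    if months < 1:
        raise ValueError("months must be at least 1")
    return (
        f"search index=used_machinery manufacturer={quote_spl(manufacturer)} "
        f"model={quote_spl(model)} country={quote_spl(country)} earliest=-{months}mon "
        "| timechart span=1mon avg(price) AS avg_price"
    )


print(price_trend_spl("Caterpillar", "MH3022", "Germany", 6))
# prints: search index=used_machinery manufacturer="Caterpillar" model="MH3022" country="Germany" earliest=-6mon | timechart span=1mon avg(price) AS avg_price
```

Here `earliest=-6mon` limits the search to the last six months, and `timechart span=1mon` buckets the matching offers by month so the trend is visible at a glance.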

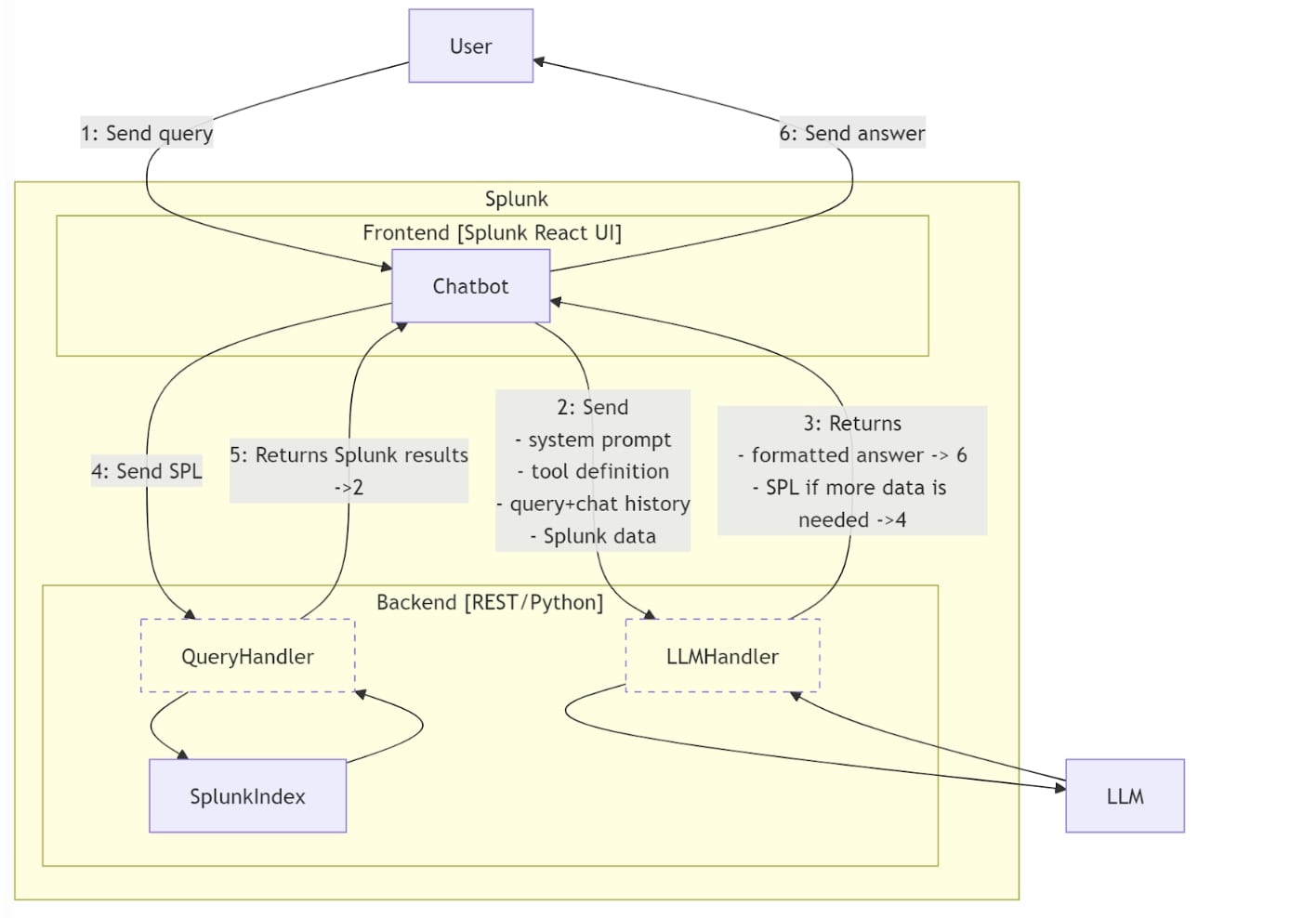

Understanding the Architecture and Data Flow

Understanding the architecture and its components is essential. It consists of four parts:

- Chatbot: Handles the front-end interaction logic using the Splunk React UI framework and is hosted within Splunk.

- QueryHandler: A lightweight proxy that runs SPL queries in Splunk under a restricted user context (with access only to the required indexes) and returns the results.

- LLMHandler: Keeps the LLM credentials separate from the front end and relays requests to, and responses from, the LLM.

- SplunkIndex: The Splunk index containing the used machinery offers.
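The authoritative safeguard for the QueryHandler is the restricted Splunk role it runs under. As an additional, defense-in-depth layer, the proxy could also reject LLM-generated SPL that names other indexes or uses commands that write or export data. The sketch below is a hypothetical regex-based pre-check, not a full SPL parser; the allowlist, the blocked-command list, and the index name `used_machinery` are assumptions. The returned parameters are meant for a POST to Splunk’s `/services/search/jobs/export` REST endpoint, which this sketch does not call.

```python
import re

ALLOWED_INDEXES = {"used_machinery"}  # hypothetical index name
# Commands that write data, send it elsewhere, or query Splunk internals.
BLOCKED_COMMANDS = {"collect", "delete", "outputlookup", "rest", "script", "sendemail"}

INDEX_RE = re.compile(r'\bindex\s*=\s*"?([\w*-]+)"?', re.IGNORECASE)
COMMAND_RE = re.compile(r"\|\s*(\w+)")


def check_spl(spl: str) -> None:
    """Raise ValueError if the query leaves the allowlist (a heuristic, not a parser)."""
    indexes = {name.lower() for name in INDEX_RE.findall(spl)}
    if not indexes:
        raise ValueError("query must name an index explicitly")
    disallowed = indexes - ALLOWED_INDEXES  # also catches wildcards like index=*
    if disallowed:
        raise ValueError(f"index not permitted: {sorted(disallowed)}")
    blocked = {cmd.lower() for cmd in COMMAND_RE.findall(spl)} & BLOCKED_COMMANDS
    if blocked:
        raise ValueError(f"command not permitted: {sorted(blocked)}")


def export_params(spl: str) -> dict[str, str]:
    """Validate the query and build form parameters for the export endpoint."""
    check_spl(spl)
    # The search API expects the string to start with a command such as "search".
    if not spl.lstrip().startswith(("search", "|")):
        spl = "search " + spl
    return {"search": spl, "output_mode": "json"}
```

Keeping this check in the proxy rather than in the browser means a modified front end cannot bypass it, while the Splunk role still enforces access even if the regex misses an edge case.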

The Interaction Flow:

1. The user asks a question through the Chatbot within Splunk.

2. The Chatbot sends a request to the LLM (e.g., Anthropic Claude) through the LLMHandler. The request includes:

   - The user’s question

   - The system prompt (describing the chatbot’s ability to query used machinery data via Splunk)

   - The tool definition (instructing the LLM to return SPL whenever Splunk data is needed, along with a description of the relevant Splunk index, its fields, and their values)

   - The chat history (the complete conversation so far)

   - Splunk data (if previously queried)

3. The LLM either returns a formatted answer (go to step 6) or generates an SPL query to fetch the required information.

4. If the LLM generates an SPL query, it is sent to Splunk through the QueryHandler.

5. The results are processed and sent back to the Chatbot, which returns to step 2 with the updated data.

6. Once the LLM gives a complete response, the Chatbot presents it to the user.
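The request in step 2 bundles several pieces together. The Python sketch below assembles them in the shape of Anthropic’s Messages API (`system`, `tools`, and `messages` parameters, with each tool described by a `name`, a `description`, and a JSON Schema `input_schema`). The tool name `run_splunk_search`, the prompt wording, and the index and field names are hypothetical. Running this code in the LLMHandler keeps the API key out of the browser; the handler could pass the dictionary to the official `anthropic` SDK via `client.messages.create(**request)`, with a real model ID in place of the placeholder.

```python
SYSTEM_PROMPT = (
    "You help employees answer questions about used machinery offers. "
    "When you need data, call run_splunk_search with a single SPL query. "
    "Answer concisely and mention the time range and region you used."
)

# Tool definition: tells the model when and how to ask for Splunk data.
SPLUNK_TOOL = {
    "name": "run_splunk_search",  # hypothetical tool name
    "description": (
        "Runs an SPL query against index=used_machinery and returns the rows as JSON. "
        "Fields: manufacturer, model, country, region, price, listing_date."  # hypothetical
    ),
    "input_schema": {
        "type": "object",
        "properties": {"spl": {"type": "string", "description": "A complete SPL search"}},
        "required": ["spl"],
    },
}


def build_request(model: str, history: list[dict], question: str, max_tokens: int = 1024) -> dict:
    """Combine system prompt, tool definition, chat history, and the new question.

    `history` holds earlier turns, including any tool_result blocks that carry
    Splunk data from previous queries. It is copied, never modified.
    """
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": SYSTEM_PROMPT,
        "tools": [SPLUNK_TOOL],
        "messages": [*history, {"role": "user", "content": question}],
    }
```

Describing the index fields inside the tool definition, rather than only in the system prompt, keeps everything the model needs to write valid SPL next to the tool it will call.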

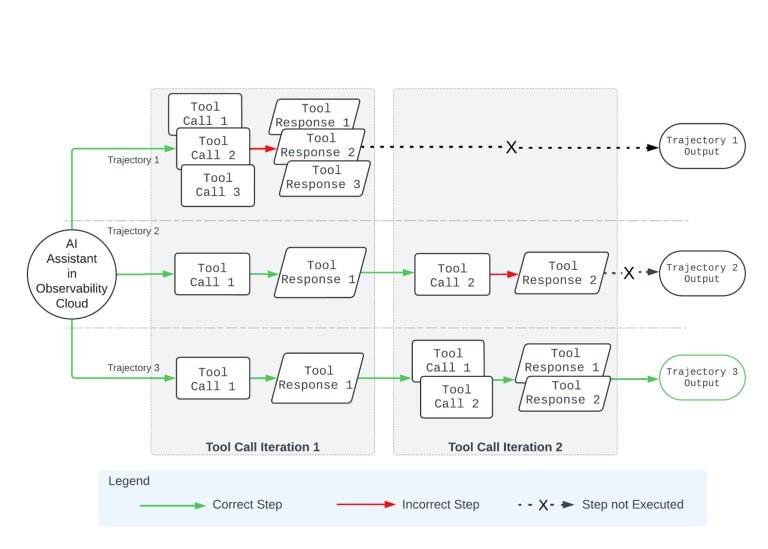
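Steps 2 to 6 form a loop that repeats until the LLM stops asking for data. The following Python sketch shows that control flow with the model and Splunk replaced by plain callables, so it runs without network access. It assumes Anthropic-style content blocks (`text`, `tool_use`, `tool_result`) and a hypothetical tool input field `spl`; `call_llm` and `run_spl` are illustrative stand-ins for the LLMHandler and QueryHandler.

```python
import json
from typing import Callable

Message = dict  # {"role": ..., "content": ...}
LLMCall = Callable[[list[Message]], dict]  # messages -> {"content": [blocks]}
SplunkCall = Callable[[str], list[dict]]  # SPL -> result rows


def answer(question: str, call_llm: LLMCall, run_spl: SplunkCall, max_rounds: int = 5) -> str:
    """Run the question/SPL/answer loop and return the final text answer."""
    messages: list[Message] = [{"role": "user", "content": question}]  # step 1
    for _ in range(max_rounds):
        reply = call_llm(messages)  # steps 2 and 3
        messages.append({"role": "assistant", "content": reply["content"]})
        tool_calls = [b for b in reply["content"] if b["type"] == "tool_use"]
        if not tool_calls:  # step 6: no SPL requested, so this is the answer
            return "".join(b["text"] for b in reply["content"] if b["type"] == "text")
        results = []
        for call in tool_calls:  # step 4: run each generated SPL query
            rows = run_spl(call["input"]["spl"])
            results.append(
                {"type": "tool_result", "tool_use_id": call["id"], "content": json.dumps(rows)}
            )
        messages.append({"role": "user", "content": results})  # step 5: back to step 2
    raise RuntimeError("no final answer within max_rounds")
```

The `max_rounds` cap is a deliberate design choice: it stops a model that keeps issuing queries from looping indefinitely and turns that case into an explicit error the Chatbot can report.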

If an SPL query returns no results, the LLM can refine the query or broaden its search parameters. For instance, if a user asks about available offers for a specific machine in Germany and Splunk returns nothing, the LLM may revise the query to include offers from across Europe or even worldwide.
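In the setup described here, the LLM itself decides how to rewrite a query that comes back empty. If you want a predictable fallback instead, or a baseline to compare the LLM against, a fixed widening ladder is one simple alternative. The Python sketch below is that rule-based substitute, not the LLM behavior described above; the filters `country="Germany"` and `region="Europe"` and the index name are hypothetical.

```python
from typing import Callable

# Narrowest to widest; an empty string means no geographic filter (global).
SCOPES = ['country="Germany"', 'region="Europe"', ""]


def widen_search(
    base: str, tail: str, scopes: list[str], run_spl: Callable[[str], list[dict]]
) -> tuple[str, list[dict]]:
    """Try each scope in order and stop at the first query that returns rows.

    Returns the last query tried and its rows (empty if every scope failed),
    so the assistant can tell the user which scope the answer is based on.
    """
    spl, rows = "", []
    for scope in scopes:
        spl = " ".join(part for part in (base, scope, tail) if part)
        rows = run_spl(spl)
        if rows:
            break
    return spl, rows
```

The tail should be a command such as `table` that yields no rows when nothing matches; a bare `stats count` typically returns a single row with a count of zero, which would stop the ladder too early.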

In-Depth Flow and User Experience:

At any point in the conversation, users can ask to see the exact Splunk query that was generated from their question.
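One way to support this without an extra LLM call is to record every SPL query the assistant runs and display it on request, for example behind a “Show query” control in the chat UI. The Python sketch below is a hypothetical helper (`QueryTrail` is not part of any Splunk library); since the chat history already contains the generated SPL, simply asking the LLM in the chat is another option.

```python
from dataclasses import dataclass, field


@dataclass
class QueryTrail:
    """Keeps every SPL query the assistant ran, grouped by user turn."""

    turns: list[list[str]] = field(default_factory=list)

    def start_turn(self) -> None:
        """Call when the user submits a new question."""
        self.turns.append([])

    def record(self, spl: str) -> None:
        """Call whenever the QueryHandler runs a query for the current turn."""
        if not self.turns:
            self.start_turn()
        self.turns[-1].append(spl)

    def explain_last_turn(self) -> str:
        """Text for a UI control; it does not start a new chat turn."""
        if not self.turns or not self.turns[-1]:
            return "No Splunk query was needed for the last answer."
        lines = [f"{i}. {spl}" for i, spl in enumerate(self.turns[-1], start=1)]
        return "Splunk queries used for the last answer:\n" + "\n".join(lines)
```

Grouping queries by turn matters because a single answer may involve several queries, for example when the search scope was widened after an empty result.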

A heartfelt thank you goes out to Florian for his incredible contributions and enthusiasm. Remember, sharing knowledge is a powerful tool!