Explore the Cutting-Edge LLM-RAG Features of Splunk DSDL 5.2 and Their Practical Uses

Background

With rapid advancements in large language models (LLMs), organizations are faced with two pressing questions: how can they safely harness LLM services, and how can these technologies deliver relevant and accurate responses tailored to their unique business needs? Currently, the majority of LLM solutions are provided as Software-as-a-Service (SaaS) applications, which require customer data and queries to flow through public cloud infrastructures. This creates significant challenges for many organizations aiming to implement LLMs in a secure manner. Moreover, since LLMs rely on pre-existing training data to generate outputs, producing contextually relevant answers that address specific client situations becomes increasingly difficult without access to current information or internal knowledge assets.

To effectively address the first challenge, organizations may want to consider deploying LLMs within an on-premises infrastructure that allows for comprehensive data governance. For the second challenge, retrieval-augmented generation (RAG) has emerged as a promising solution. RAG utilizes customizable knowledge bases as additional contextual information to improve LLM responses. Generally, information sources like internal documents and system logs are vectorized and stored in a vector database (vectorDB). When a query is executed, relevant knowledge is retrieved through vector similarity searches and integrated into the context of the LLM prompt, aiding the generation process.

LLM-RAG in Splunk DSDL 5.2

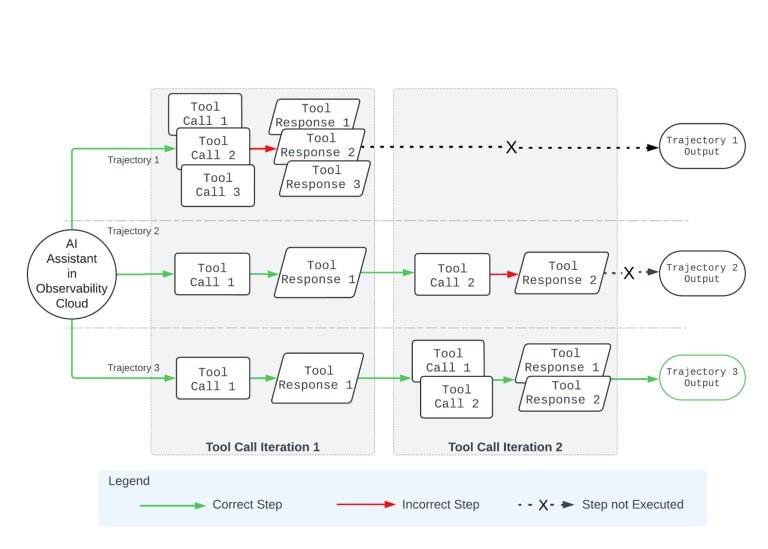

In this latest update, we have significantly upgraded the Splunk App for Data Science and Deep Learning (DSDL) by incorporating an on-premise LLM-RAG architecture (as illustrated in Fig. 1). This release features a variety of commands and dashboards that make accessing the capabilities of the Splunk DSDL app seamless and intuitive. Our system is built around three key modules: an Ollama module for local LLM services, a Milvus module for managing local vectorDB, and a DSDL container that incorporates the LlamaIndex framework, facilitating smooth integration between the LLM and vectorDB modules for RAG functions.

Through this architecture, we’ve expanded the functionality of Splunk DSDL to encompass the following four use cases:

- Standalone LLM: utilizing the LLM for zero-shot Q&A or natural language processing tasks.

- Standalone VectorDB: leveraging VectorDB to encode Splunk data and conduct similarity searches.

- Document-based LLM-RAG: encoding documents—such as internal knowledge bases or past support tickets—into VectorDB as contextual resources for LLM outputs.

- Function-Calling-based LLM-RAG: implementing functional tools in the Jupyter notebook that the LLM can execute automatically, enriching the context for its output.

Below, we introduce each of these four use cases with brief descriptions and example outputs.

Use Case 1: Standalone LLM

The standalone LLM excels at handling Q&A tasks using data sourced from Splunk. For example, we can categorize text data inputted into Splunk via the dashboard in Splunk DSDL 5.2. Initially, a search is conducted in Splunk to obtain the required text messages. Subsequently, within the settings panel, the LLM model is chosen, and the following prompt is entered into the appropriate text box:

Categorize the text. Choose from Biology, Math, Computer Science, and Physics. Please provide only the category name in your response.

After clicking the “Run Inference” button, the local LLM receives the text data along with the prompt. Once the generation is complete, the resulting outputs, including their respective runtime durations for each entry, are displayed in the output panel.

Use Case 2: Standalone VectorDB

In DSDL 5.2, built-in dashboards facilitate encoding Splunk data into VectorDB collections and performing similarity searches. In the example provided, an error log from the Splunk _internal index is linked with previous logs housed in a VectorDB collection via vector search based on cosine similarity. The selected log entry (“Socket error … broken pipe”) has successfully matched with the top four similar log entries stored in the Internal_Error_Logs collection, as configured in the settings panel.

Use Case 3: Document-based LLM-RAG

DSDL 5.2 introduces dashboards capable of encoding documents from the Docker host into VectorDB collections for use in LLM generation. In this scenario, we’ve previously encoded documents related to the Buttercup online store into a vector collection named Buttercup_store_info. On the LLM-RAG dashboard, we select the collection and the local LLM, then enter this query:

Customer [email protected] encountered a Payment processing error while checking out on the product page for DB-SG-G01. Please answer the following questions:

- List of employees responsible for supporting this product.

- What were the resolution notes on past tickets with identical issue descriptions?

After clicking the “Query” button, the query is sent to the container environment, where relevant documents from the collection are automatically retrieved and included as contextual data before being forwarded to the LLM. The resulting response from the LLM appears in the output panel alongside the documents referenced.

Use Case 4: Function-Calling-based LLM-RAG

Another way the LLM can obtain additional contextual information is through the execution of functional tools, a method known as Function Calling. This approach allows for significant user customization, and it is highly recommended for users to develop their own tools using Python tailored to their specific needs. In DSDL 5.2, we demonstrate two example function tools: Search Splunk Event and Search VectorDB.

In the following example, the LLM is presented with the query:

Search Splunk events in index

_internaland sourcetypesplunkdfrom 510 minutes ago to 500 minutes ago containing the keyword Socket error; also seek similar records from VectorDB collectionphoebe_log_messageand inform me about the possible issues.

In response to the query, the LLM automatically uses the two defined function tools to gather results from both the Splunk index and VectorDB collections. These results then inform the LLM’s final answer. In this sample dashboard, the LLM’s response is exhibited in the output panel, along with the execution results from both functional tools.

Conclusion

In this latest 5.2 release of Splunk DSDL, we introduce the aforementioned LLM-RAG functionalities, designed to be adaptable and extendable for a range of use cases. For more detailed information, please refer to the documentation.

Note: The inception of this project stemmed from a rotation within the GTS Global Architect team, driven by customer demands. Thanks are due to my exceptional colleagues, whose collaborative efforts in research, development, and refinement have been invaluable.