Explore the Top 10 Groundbreaking Topics at the 2024 Data Engineering Summit

Conferences are more than speakers standing at podiums; they reflect the trends, problems, and topics that practitioners deal with every day. The upcoming Data Engineering Summit, hosted alongside ODSC East from April 23rd to 24th, will cover several subjects that matter to data engineering teams. Here are ten key topics on the agenda at this year’s Data Engineering Summit.

Is Gen AI A Data Engineering or Software Engineering Problem?

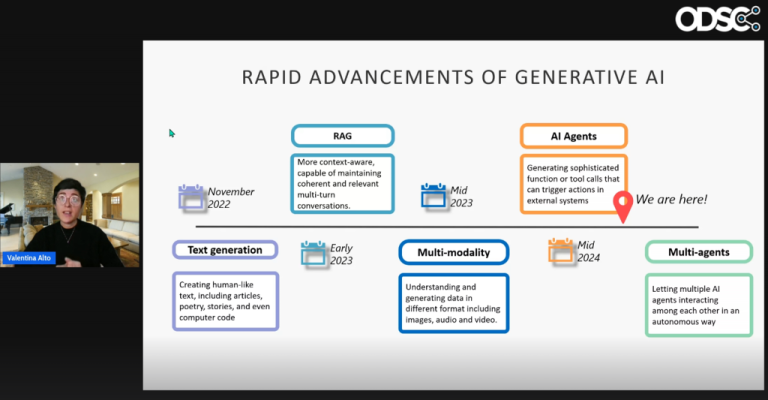

Generative AI is not solely a data engineering problem or a software engineering problem; it requires collaboration between both disciplines. Data engineers prepare the training data, while software engineers design and build the models. Generative AI is therefore a team effort, and organizations need to choose carefully which parts of the generative AI pipeline each team will own so that it does not become a problem for everyone.

Related Session: Is Gen AI A Data Engineering or Software Engineering Problem?: Barr Moses, Co-Founder & CEO at Monte Carlo

Data Infrastructure

Data engineering teams face many challenges: integrating data from multiple sources into consistent formats, scaling systems to handle growing data volumes, and keeping data secure and compliant. They also carry technical debt from past shortcuts while trying to maintain the data quality that reliable results depend on.

Related Session: Data Infrastructure through the Lens of Scale, Performance and Usability: Ryan Boyd, Co-founder of MotherDuck

Foundation Models

Foundation models represent a revolutionary shift in the world of AI. Trained on vast and diverse datasets, these models act as versatile AI tools that can be adapted and fine-tuned for various applications, from language processing to image generation. This versatility is expanding the horizons of AI capabilities significantly.

Related Session: From Research to the Enterprise: Leveraging Foundation Models for Enhanced ETL, Analytics, and Deployment: Ines Chami, Co-founder and Chief Scientist at NUMBERS STATION AI

Data Contracts

A data contract is a formal agreement for data sharing. It sets clear expectations between the provider and the consumer about the data’s structure (format), meaning (definitions), quality (standards), and delivery (frequency, access). This agreement ensures that all parties involved speak the same “data language.”
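To make the idea concrete, here is a minimal sketch of a data contract expressed in Python. It is an illustration only: the `FieldSpec` class, the `ORDERS_CONTRACT` example, and the `validate` helper are hypothetical names invented here, not part of any particular tool or of the related session. The sketch covers structure, meaning, and one quality rule; delivery terms such as frequency are left out for brevity.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class FieldSpec:
    """One field of a hypothetical data contract."""
    name: str               # structure: field name
    dtype: type             # structure: expected Python type
    description: str        # meaning: definition agreed by both parties
    nullable: bool = False  # quality: whether missing values are allowed


# Hypothetical contract for an "orders" feed (all names are assumptions).
ORDERS_CONTRACT = [
    FieldSpec("order_id", str, "Unique identifier of the order"),
    FieldSpec("amount_usd", float, "Order total in US dollars"),
    FieldSpec("coupon_code", str, "Promotion code, if any", nullable=True),
]


def validate(record: dict[str, Any], contract: list[FieldSpec]) -> list[str]:
    """Return the contract violations for one record (empty list = conforms)."""
    violations = []
    for spec in contract:
        if spec.name not in record:
            violations.append(f"missing field: {spec.name}")
            continue
        value = record[spec.name]
        if value is None:
            if not spec.nullable:
                violations.append(f"null not allowed: {spec.name}")
        elif not isinstance(value, spec.dtype):
            violations.append(
                f"wrong type for {spec.name}: "
                f"expected {spec.dtype.__name__}, got {type(value).__name__}"
            )
    return violations
```

A provider could run a check like `validate(record, ORDERS_CONTRACT)` before publishing, and a consumer could run the same check on arrival, so both sides enforce the same expectations.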

Related Session: Building Data Contracts with Open Source Tools: Jean-Georges Perrin, CIO at AbeaData

Semantic Layers

Semantic layers enhance data analysis by interpreting complex data frameworks and translating them into business-friendly terminology. This leads to a cohesive view of data sourced from various origins, empowering users to make informed, data-driven decisions.
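As a rough illustration of that translation step, the sketch below maps business terms to physical SQL expressions and builds a query from them. Every table, column, and function name here (`fact_orders`, `dim_customer`, `build_query`, and so on) is a made-up assumption for the example; real semantic layer products are far richer than this.

```python
# Hypothetical semantic model: business terms on the left, physical SQL on the right.
METRICS = {
    "revenue": "SUM(o.amt_usd)",
    "order_count": "COUNT(DISTINCT o.ord_id)",
}
DIMENSIONS = {
    "region": "c.rgn_cd",
    "order_month": "DATE_TRUNC('month', o.ord_ts)",
}
# Assumed join between a fact table and a customer dimension table.
FROM_CLAUSE = "fact_orders o JOIN dim_customer c ON o.cust_id = c.cust_id"


def build_query(metric: str, dimension: str) -> str:
    """Translate a business-level request into SQL over the physical tables.

    Raises KeyError if the metric or dimension is not defined in the model.
    """
    metric_sql = METRICS[metric]
    dim_sql = DIMENSIONS[dimension]
    return (
        f"SELECT {dim_sql} AS {dimension}, {metric_sql} AS {metric} "
        f"FROM {FROM_CLAUSE} GROUP BY {dim_sql}"
    )


print(build_query("revenue", "region"))
```

The point of the design is that a user asks for "revenue by region" without knowing that revenue lives in `amt_usd` or that region is stored as `rgn_cd`; the definitions are kept in one place and reused by every query.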

Related Session: The Value of A Semantic Layer for GenAI: Jeff Curran, Senior Data Scientist at AtScale

Unstructured Data

Unstructured data is information that falls outside traditional structured formats such as spreadsheets. Picture a chaotic mix of documents, emails, videos, and social media interactions. This data holds immense potential, but systems struggle to analyze it without more sophisticated techniques.

Related Session: Unlocking the Unstructured with Generative AI: Trends, Models, and Future Directions: Jay Mishra, Chief Operating Officer at Astera

Monolithic Architecture

In the realm of software development, a monolithic architecture refers to the conventional model where the entire application is conceived as one unified entity. Visualize it as a colossal rock where every component is interconnected and inseparable, encompassing everything from the user interface to the underlying data storage.

Related Session: Data Pipeline Architecture – Stop Building Monoliths: Elliott Cordo, Founder, Architect, and Builder at Datafutures

Experimentation Platforms

Experimentation platforms serve as powerful tools enabling the execution of A/B tests across websites, applications, or marketing endeavors. By creating variations of elements to test (like layout or pricing) and presenting them to distinct user segments, these platforms evaluate the outcomes to identify the most effective option. They are essential for fostering data-driven decision-making and enhancing product efficacy.
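The two core steps described above, splitting users into groups and comparing outcomes, can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how any specific platform works: the function names are hypothetical, assignment uses a plain hash of the user ID, and the comparison is a standard two-proportion z-statistic.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str,
                   variants=("control", "treatment")) -> str:
    """Deterministically bucket a user so they always see the same variant."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def two_proportion_z(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """z-statistic for the difference in conversion rate between B and A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / std_err


# Made-up numbers: 100 of 1,000 control users convert vs. 130 of 1,000 treated.
z = two_proportion_z(100, 1000, 130, 1000)
print(f"z = {z:.2f}")  # |z| above roughly 1.96 suggests significance at the 5% level
```

Hashing on the experiment name plus the user ID keeps a user's assignment stable across sessions while letting different experiments split the population independently.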

Related Session: Experimentation Platform at DoorDash: Yixin Tang, Engineer Manager at DoorDash

Open Data Lakes

An open data lake champions accessibility and adaptability. It retains data in vendor-agnostic formats while utilizing open standards to facilitate easier access and collaboration, thereby steering clear of vendor lock-in. Imagine it as a public park for your data compared to a secluded, private garden.

Related Session: Dive into Data: The Future of the Single Source of Truth is an Open Data Lake: Christina Taylor, Senior Staff Engineer at Catalyst Software

Data-Centric AI

Data-centric AI challenges the conventional model by concentrating on improving data quality (labeling, cleaning, augmentation) rather than focusing only on algorithms. This iterative cycle continuously improves the data, which in turn leads to better AI performance. A fitting analogy is constructing a house: even the finest tools can’t build a sturdy structure with subpar materials. Data-centric AI ensures that reliable AI outputs rest on robust data.

Related Session: How to Practice Data-Centric AI and Have AI Improve its Own Dataset: Jonas Mueller, Chief Scientist and Co-Founder at Cleanlab

Sign me up!

For data engineering professionals, keeping up with industry developments is vital. Join us at ODSC’s Data Engineering Summit and ODSC East to stay current with the latest in data engineering. The Data Engineering Summit on April 24th, co-located with ODSC East 2024, will show you the changes coming to the field before they arrive. Secure your pass today.
