Mastering Observability with Splunk: A Guide to Elevating GenAI Application Insights

Is Your Organization Prepared for the Generative AI Transformation?

Has your organization built an innovative generative AI application? Are your CTO, CIO, or CEO counting on its success? Absolutely! But here comes the vital question: are you ready to monitor and resolve issues once users start working with the application? Don’t worry. My colleague Derek Mitchell has written two outstanding articles on Splunk Lantern that show how Splunk Observability Cloud can give your GenAI applications the observability insights they need.

Topics Covered

For many, generative AI is still uncharted territory. To be honest, just six months ago I would have struggled even to explain what an LLM is! Fortunately, Derek not only digs into the details of Splunk and OpenTelemetry but also offers newcomers a helpful primer on essential generative AI concepts, including:

- LangChain: Learn what LangChain is and how it speeds up building the core components of generative AI applications.

- Retrieval-Augmented Generation (RAG): Learn how RAG retrieves relevant data and adds it to the model’s prompt, and how that improves the relevance and accuracy of the generated results.

- Vector Databases: Explore why vector databases matter and how to make sure they scale effectively.

- Embeddings: Understand why your organization’s data is the key ingredient in a successful generative AI application, and how embeddings (numeric vector representations of that data) help identify the most relevant information for generating accurate results.
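To make the embeddings and RAG ideas above a little more concrete, here is a minimal Python sketch of the retrieval step. It assumes tiny hand-written three-dimensional vectors in place of real embeddings; in practice an embedding model produces vectors with hundreds or thousands of dimensions, and a vector database performs the similarity search at scale. The names `DOCS`, `cosine_similarity`, `retrieve`, and `build_prompt` are hypothetical illustrations, not part of LangChain, OpenLLMetry, or any Splunk product.

```python
import math

# Hypothetical toy "embeddings": hand-written 3-dimensional vectors.
# A real application would get these from an embedding model and store
# them in a vector database.
DOCS = {
    "Refund policy: refunds are issued within 30 days.": [0.9, 0.1, 0.0],
    "Shipping takes 3-5 business days.": [0.1, 0.9, 0.1],
    "Our office is closed on public holidays.": [0.0, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_vec, docs, k=2):
    """Return the k document texts whose vectors are most similar to query_vec."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in docs.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


def build_prompt(question, context_docs):
    """Augment the user's question with the retrieved context before calling an LLM."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    # Hypothetical query vector standing in for "How do I get my money back?"
    query_vec = [0.8, 0.2, 0.1]
    top_docs = retrieve(query_vec, DOCS, k=1)
    print(build_prompt("How do I get my money back?", top_docs))
```

With these toy vectors, the refund-policy document scores highest for the money-back question because its vector points in nearly the same direction as the query vector. The resulting prompt is what a RAG application would then send to the LLM, and each of these steps (retrieval, prompt assembly, model call) is a natural place to measure latency.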

What’s in It for You?

In these Lantern articles, you will:

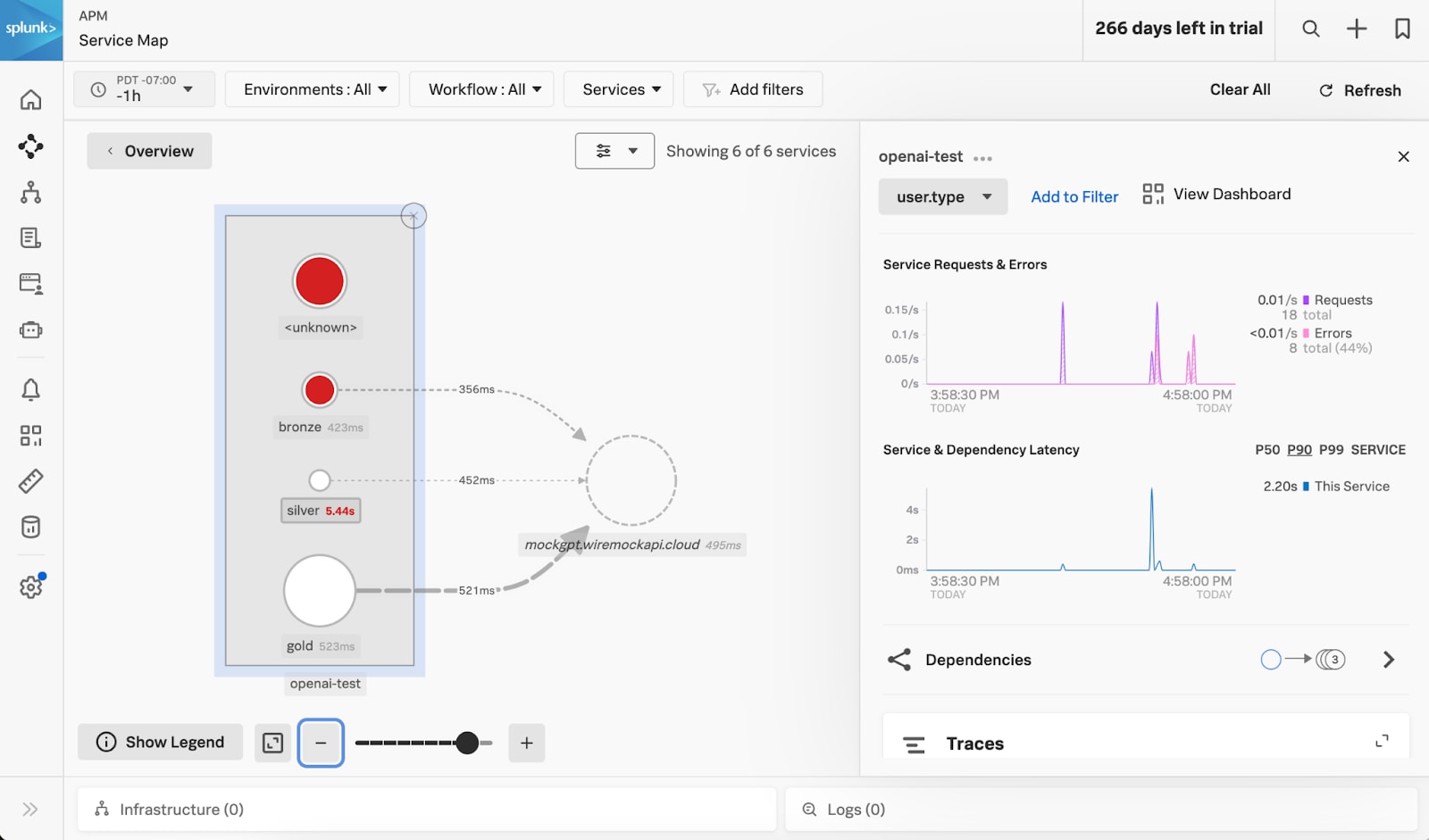

- See how to use Splunk Observability Cloud to visualize your GenAI application through APM service trees, so you can pinpoint issues that could affect the user experience.

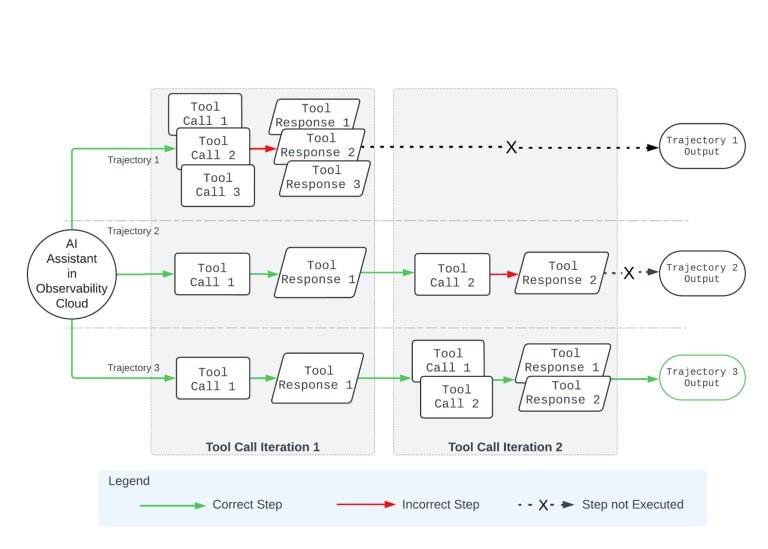

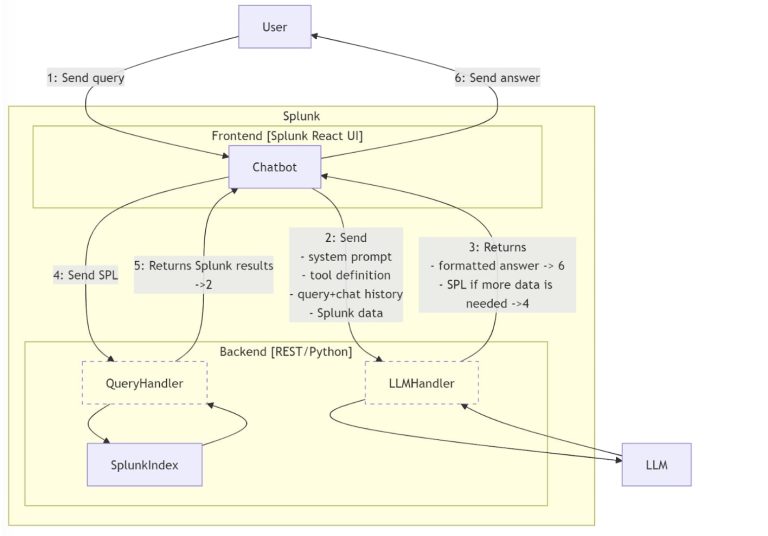

- Learn best practices for adding observability to the crucial components of GenAI applications, including how to instrument LangChain for better performance and faster troubleshooting.

- Discover how Splunk Observability Cloud and OpenTelemetry together let you monitor workflows and latency, so you can keep your LLMs running efficiently.

Eager to Begin?

You’ve made significant strides: your generative AI application is working, and it may even be live. But you can’t afford to overlook the crucial final step, observability! Don’t miss this chance to learn how to keep your AI application performing well. Read Derek’s two Lantern articles to get the tools and insights you need for seamless observability:

- Instrumenting LLM Applications with OpenLLMetry and Splunk

- Monitoring LangChain LLM Applications with Splunk Observability Cloud

Happy Splunking!